The app is constantly trying new tools and strategies in an effort to break repetitive patterns.

“Unfortunately, just like Facebook, Instagram, and other social networks, in practice, this means that the most shocking and emotionally jarring content is the stuff that’s served most often,” Jon Brodsky, CEO at social network YouNow, told . “This is the same story we’ve seen several times before, and unfortunately, it’s almost impossible to get people emotionally invested in less shocking content. The only difference between TikTok and Facebook is the speed at which people are being served jarring content.”

Baruch Labunski, founder at SEO firm Rank Secure, agreed. “It’s hard to believe that TikTok has suddenly developed a conscience and cares about the health and wellbeing of its users,” he said. “Everything in their algorithm is designed to bring users back and keep them on the platform longer.”

Several experts see the experiment less as a move that will actually protect users from addictive, harmful content and more as a PR maneuver aimed at appeasing regulators and critics after recent scrutiny.

Researchers and legislators have expressed concern about TikTok’s ability to draw users into a vicious circle of negative or harmful content, such as videos about self-harm, depression, or eating disorders. Repeated exposure to this kind of content could affect users’ mental health. According to the Mental Health Foundation, overuse of social media can lead to loneliness, lower self-worth, anxiety, and depression.

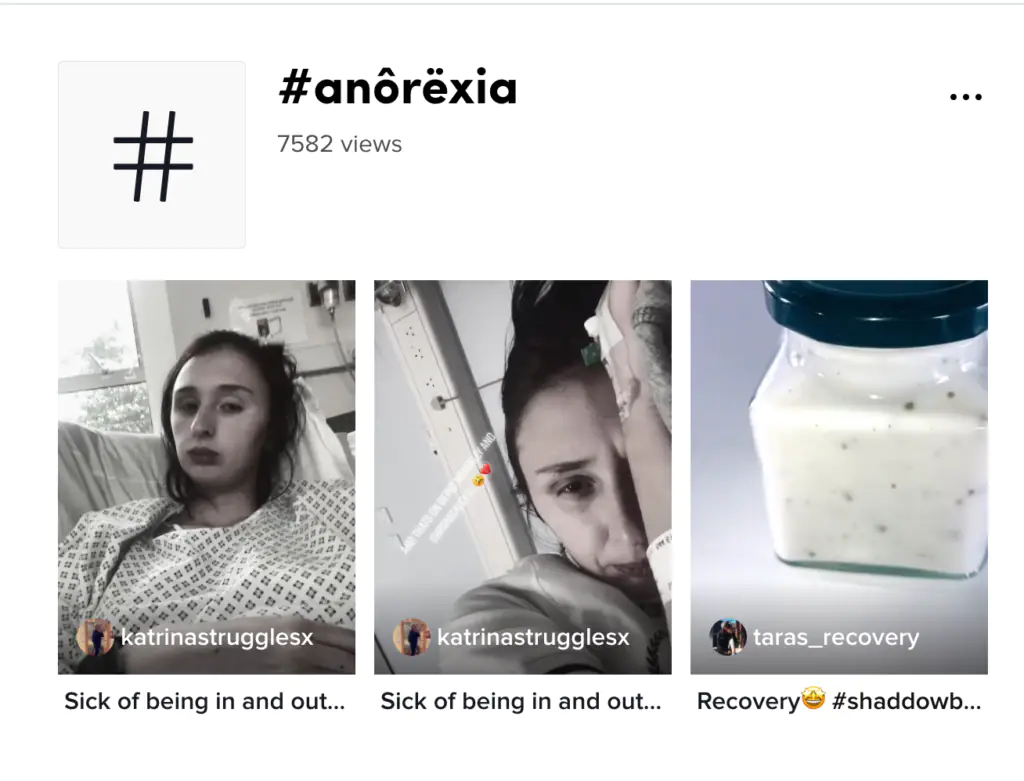

Users can also sidestep the changes simply by using altered spellings of terms associated with negative or harmful content. It’s a workaround every social media platform contends with in content moderation: underage users can enter a false age, and targeted groups can keep changing their names or hashtags as the rules evolve.

“We’re currently testing ways to further diversify recommendations, while the tool that will let people choose words or hashtags associated with content they don’t want to see in their For You feed will be testing in the near future,” spokesperson Jamie Favazza told .

TikTok didn’t respond to any further inquiries.

Part of what makes TikTok so successful is the addictiveness driven by its algorithm, which learns a user’s interests from signals such as how long they lingered on or rewatched a video, and then serves up similar content. The approach resembles that of other social networks, but TikTok appears able to fold this feedback into its recommendations much faster than its peers.

TikTok, a social app that has been around for five years, is one of the most popular among Gen Z users. Throughout 2021, TikTok’s growth outpaced its competitors as it hit 1 billion users last September. By comparison, Facebook took eight years to reach 1 billion users, according to BuyShares, an insights company.

The popular app is under increasing pressure, and the company has had to rethink its features. In December, the platform announced that it would implement tools to “diversify” its For You page, the feed that serves users an endless stream of content based on their interests. The app has also been criticized for spreading misinformation, such as anti-vaccination messaging, and white supremacist content.

The company made this move after testifying before Congress in October, in a panel focused on consumer safety and online protection. Executives from YouTube, Snap, and Instagram have also recently testified about online safety. With Instagram under fire for downplaying its own research showing that its products harm teenage users, lawmakers are increasingly putting these companies on the hot seat, but some doubt that this will get these businesses to change their ways.

TikTok, owned by Chinese tech company ByteDance, said that too much of one thing doesn’t “fit with the diverse discovery experience we aim to create.” TikTok stated that it will try to combat this by diversifying recommendations and occasionally serving users content that falls outside their usual preferences.

“As we continue to develop new strategies to interrupt repetitive patterns, we’re looking at how our system can better vary the kinds of content that may be recommended in a sequence. That’s why we’re testing ways to avoid recommending a series of similar content – such as around extreme dieting or fitness, sadness, or breakups – to protect against viewing too much of a content category that may be fine as a single video but problematic if viewed in clusters,” the post continued.
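TikTok has not published how its diversification test works. As a rough illustration of the idea described in the post, the hypothetical Python sketch below reorders a relevance-ranked list so that no single category (labels standing in for topics like extreme dieting or breakups) appears more than `max_run` times in a row. The `Candidate` type, the category labels, and the greedy strategy are all assumptions for illustration, not TikTok’s method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    video_id: str
    category: str  # hypothetical topic label, e.g. "extreme_dieting"


def diversify(ranked: list[Candidate], max_run: int = 2) -> list[Candidate]:
    """Reorder a relevance-ranked list so no category appears more than
    max_run times in a row, keeping relevance order where possible."""
    remaining = list(ranked)
    feed: list[Candidate] = []
    while remaining:
        # Find the category at the end of the feed and how long its run is.
        last = feed[-1].category if feed else None
        run = 0
        for item in reversed(feed):
            if item.category != last:
                break
            run += 1
        # Take the best-ranked candidate that would not extend an over-long run;
        # if every remaining candidate would, fall back to the top one.
        choice = next(
            (c for c in remaining if not (c.category == last and run >= max_run)),
            remaining[0],
        )
        remaining.remove(choice)
        feed.append(choice)
    return feed


ranked = [
    Candidate("A", "dieting"),
    Candidate("B", "dieting"),
    Candidate("C", "dieting"),
    Candidate("D", "comedy"),
    Candidate("E", "dieting"),
]
print([c.video_id for c in diversify(ranked)])
```

With `max_run=2`, the list above comes out as A, B, D, C, E: the third dieting clip is pushed behind the comedy clip. When nothing outside the repeated category remains, the sketch accepts the repeat rather than dropping content.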

The question of whether social networks have a responsibility to protect users from the adverse effects of their products is a topic of ongoing debate. As Will Eagle, VP of marketing and strategy at digital talent network Collab, points out, users may not always do what’s best for themselves, and tech companies that disregard these content moderation issues risk imploding their own platforms.

“TikTok knows that there is a responsibility component that can (and should) play a role within the algorithm,” Eagle said. “Could they ignore it? Sure, but it would ultimately destroy the platform from within. The question on the table is, to what extent can the algorithm reinforce negative experiences?”

Other content, such as videos showing the use of controlled substances or graphic content in potentially dangerous stunts, is allowed on the platform but excluded from For You recommendations. TikTok said it removes content that promotes or shows eating disorders, dangerous acts and challenges, reckless driving, and similar issues. Users can also tap “not interested” if they are served content they don’t want, a feature Eagle recommends for getting out of a potentially negative or harmful vortex in the app.

According to the company, it is working with experts in medicine, AI ethics, and clinical psychology as the tools are developed. TikTok did not specify when it would introduce the tool that will allow people to choose words or hashtags associated with content they don’t want to see in their For You feed.

So far, some observers have found that users can get around TikTok’s diversification efforts by altering spellings and adding accent marks to terms in the topics being tracked. Flynn Zaiger, CEO of social media agency Online Optimism, gave the example of users writing “anôréxîâ” instead of “anorexia”: without the accent marks, the app would refer users to support from the National Eating Disorders Association.
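Accent-mark tricks like the one Zaiger describes defeat filters that compare strings exactly. One common countermeasure, sketched below in Python as an assumption rather than anything TikTok has said it uses, is Unicode normalization: decomposing each character with NFKD and dropping the combining accent marks, so “anôréxîâ” folds back to “anorexia”. The `SUPPORT_TERMS` set and the `needs_support_prompt` function are hypothetical.

```python
import unicodedata

# Hypothetical set of terms that should trigger a support prompt.
SUPPORT_TERMS = {"anorexia"}


def fold_accents(text: str) -> str:
    """Decompose characters (NFKD), drop combining marks, and casefold,
    so accented variants compare equal to the plain spelling."""
    decomposed = unicodedata.normalize("NFKD", text)
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return stripped.casefold()


def needs_support_prompt(query: str) -> bool:
    # Compare the folded query, not the raw string, against the watch list.
    return fold_accents(query) in SUPPORT_TERMS


print("anôréxîâ" in SUPPORT_TERMS)       # exact match misses the variant
print(needs_support_prompt("anôréxîâ"))  # normalized match catches it
```

This only undoes diacritics. Deliberate misspellings, inserted characters, and euphemisms pass straight through, which is consistent with Zaiger’s point that users keep finding new ways around keyword-based moderation.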

“TikTok’s attempts to diversify the feed, three weeks in, has just led to individuals to come up with new ways to continue to share similar content, no matter the danger, using different words and euphemisms to get around the algorithms,” Zaiger said.

Zaiger doubts that changes to how this text is filtered will be enough to stop the spread of harmful content, since engagement is how social media companies make their money and remain competitive. He said: “TikTok’s initial announcement felt timed to antagonize and attempt to separate themselves from the negative stories coming out about Instagram.”